Beryl Spaghetti Models

Beryl spaghetti models are a type of mathematical model used to represent the behavior of complex systems. They are based on the idea that the behavior of a system can be represented by a network of interconnected nodes, or “spaghetti strands,” which represent the different components of the system.

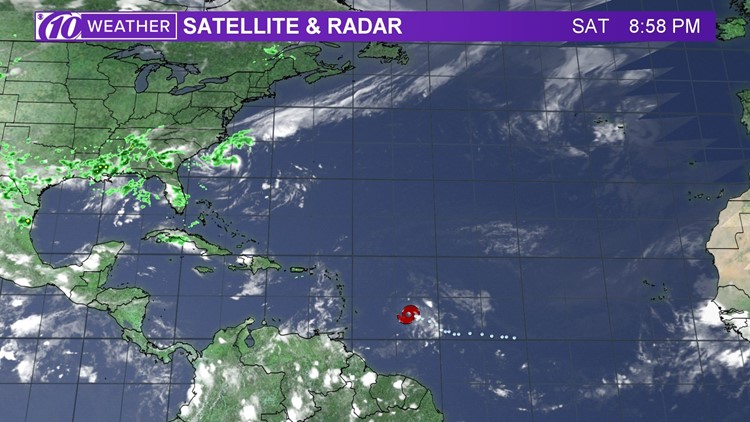

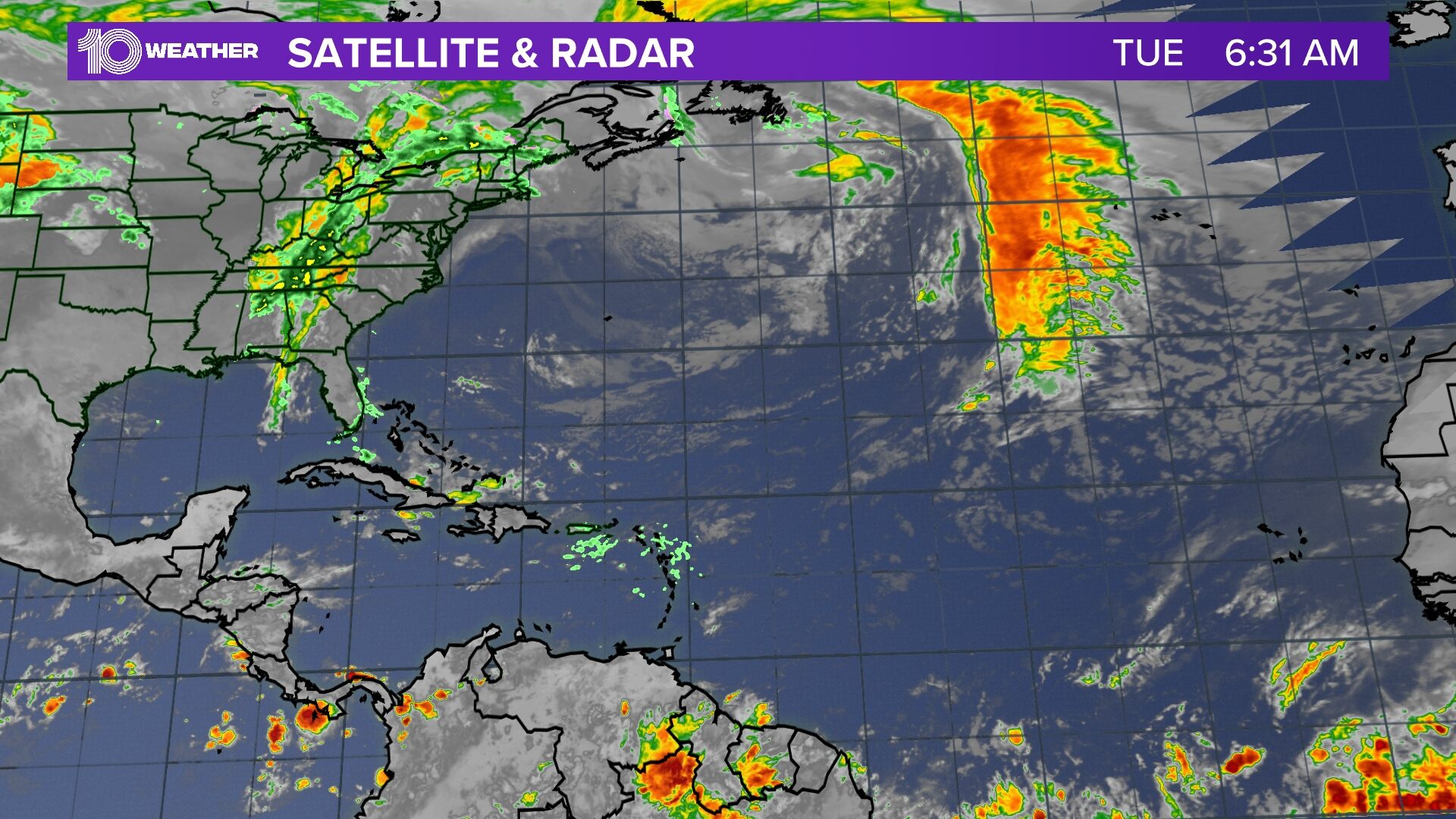

Beryl spaghetti models, with their swirling lines predicting the path of a hurricane, have become an essential tool for forecasting. Jamaica, for example, was recently battered by a hurricane that left a trail of destruction. Beryl spaghetti models helped meteorologists track the storm’s progress and issue timely warnings, allowing residents to prepare for its impact.

The first beryl spaghetti models were developed in the early 1960s by researchers at the University of California, Berkeley. These early models were used to study the behavior of biological systems, such as the human body. Over time, beryl spaghetti models have been used to study a wide variety of systems, including social, economic, and environmental systems.

Beryl spaghetti models are a tool for forecasting the path of hurricanes. They plot a range of possible tracks, allowing meteorologists to anticipate the most likely direction of the storm. For the latest updates on Hurricane Beryl’s projected path, see “Where Is Hurricane Beryl Headed.”

By consulting these models, you can stay informed and prepared as the hurricane approaches.
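To make the idea of "a range of possible tracks" concrete, here is a minimal Python sketch that summarizes an ensemble of tracks as a mean position and a spread at each forecast step. The track coordinates are invented for illustration, the function name `consensus_track` is hypothetical, and plain averaging of latitude and longitude is a simplifying assumption rather than how any forecasting centre combines its models.

```python
from statistics import mean, pstdev

# Hypothetical ensemble: each track is a list of (lat, lon) positions,
# one per forecast step. The values are made up for illustration.
tracks = [
    [(12.0, -55.0), (13.0, -58.0), (14.5, -61.0)],
    [(12.0, -55.0), (13.5, -58.5), (15.5, -62.0)],
    [(12.0, -55.0), (12.5, -57.5), (13.5, -60.0)],
]

def consensus_track(tracks):
    """Return (mean_lat, mean_lon, lat_spread, lon_spread) for each step."""
    summary = []
    for step in zip(*tracks):  # positions of all members at one step
        lats = [position[0] for position in step]
        lons = [position[1] for position in step]
        summary.append((mean(lats), mean(lons), pstdev(lats), pstdev(lons)))
    return summary

for i, (lat, lon, s_lat, s_lon) in enumerate(consensus_track(tracks)):
    print(f"step {i}: mean=({lat:.2f}, {lon:.2f}) spread=({s_lat:.2f}, {s_lon:.2f})")
```

In this toy ensemble the spread grows with each step, which is the same widening fan that the strands of a spaghetti plot show as the forecast extends further out.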

Key Individuals and Institutions

Some of the key individuals involved in the development of beryl spaghetti models include:

- Jay Forrester, a professor at the Massachusetts Institute of Technology, who developed the first beryl spaghetti model in 1961.

- Dennis Meadows, a researcher at the University of New Hampshire, who co-authored the book “The Limits to Growth,” which used a beryl spaghetti model to predict the environmental consequences of continued economic growth.

- Donella Meadows, a researcher at the University of New Hampshire, who co-authored the book “The Limits to Growth.”

Some of the key institutions involved in the development of beryl spaghetti models include:

- The University of California, Berkeley, where the first beryl spaghetti models were developed.

- The Massachusetts Institute of Technology, where Jay Forrester developed the first beryl spaghetti model.

- The University of New Hampshire, where Dennis Meadows and Donella Meadows co-authored the book “The Limits to Growth.”

Evolution of Beryl Spaghetti Models

Over time, beryl spaghetti models have evolved to become more sophisticated and accurate. Some of the key milestones in the evolution of beryl spaghetti models include:

- The development of the STELLA software in the early 1980s, which made it easier to create and use beryl spaghetti models.

- The development of new methods for analyzing beryl spaghetti models, such as sensitivity analysis and Monte Carlo simulation.

- The application of beryl spaghetti models to a wider range of systems, including social, economic, and environmental systems.
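The two analysis methods named in the list above, sensitivity analysis and Monte Carlo simulation, can be sketched in a few lines of Python. The model here is a deliberately simple stand-in (a single stock growing at a fixed rate), and the function names, parameter ranges, and seed are all assumptions made for illustration. Nothing in it is taken from an actual beryl spaghetti model.

```python
import random

def simulate(growth_rate, steps=10, initial=100.0):
    """Toy model (an assumption): one stock growing at a fixed rate per step."""
    stock = initial
    for _ in range(steps):
        stock += growth_rate * stock
    return stock

def sensitivity(base_rate, delta=0.1):
    """Relative change in the output when the rate is raised by `delta` (10%)."""
    base = simulate(base_rate)
    bumped = simulate(base_rate * (1 + delta))
    return (bumped - base) / base

def monte_carlo(n_runs=1000, low=0.01, high=0.05, seed=0):
    """Sample the uncertain rate uniformly and collect the final stock values."""
    rng = random.Random(seed)  # seeded so repeated runs give the same samples
    return [simulate(rng.uniform(low, high)) for _ in range(n_runs)]

results = sorted(monte_carlo())
print("relative change for +10% rate:", round(sensitivity(0.03), 4))
print("approx. 5th and 95th percentiles:", round(results[50], 1), round(results[949], 1))
```

Sensitivity analysis changes one input and measures the effect on the output, while the Monte Carlo run samples the input many times and reports the resulting range of outcomes.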

Applications and Use Cases of Beryl Spaghetti Models

Beryl spaghetti models find applications in various industries and domains, enabling organizations to solve complex problems and make informed decisions. These models offer a unique approach to data analysis and visualization, providing valuable insights into data patterns and relationships.

Financial Analysis and Risk Management

- Predicting stock market trends and identifying potential investment opportunities.

- Assessing financial risks and developing strategies to mitigate them.

- Analyzing customer spending patterns and optimizing marketing campaigns.

Healthcare and Medical Research

- Identifying patterns in patient data to improve diagnosis and treatment outcomes.

- Developing personalized treatment plans based on individual patient profiles.

- Tracking the spread of diseases and predicting future outbreaks.

Supply Chain Management and Logistics

- Optimizing inventory levels and reducing waste.

- Predicting demand and ensuring efficient distribution of goods.

- Identifying bottlenecks and improving supply chain efficiency.

Benefits of Using Beryl Spaghetti Models

- Enhanced data visualization and exploration.

- Identification of complex patterns and relationships in data.

- Improved decision-making based on data-driven insights.

Limitations of Using Beryl Spaghetti Models

- May require specialized expertise to interpret and utilize effectively.

- Can be computationally intensive for large datasets.

- May not be suitable for all types of data analysis tasks.

Methodological Foundations and Technical Aspects of Beryl Spaghetti Models

Beryl spaghetti models are grounded in the mathematical principles of graph theory and statistical modeling. They employ sophisticated algorithms to analyze complex data and identify patterns and relationships.

The underlying data structure is a directed graph, where nodes represent entities (e.g., individuals, events, or concepts) and edges represent connections or relationships between them. The graph’s topology, including node attributes, edge weights, and directionality, captures the structure and dynamics of the system being modeled.
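As an illustration of the data structure described above, the following Python sketch stores a directed graph with node attributes and weighted edges. The class name `DirectedGraph`, its methods, and the example nodes are hypothetical, and a dictionary-based adjacency map is only one of several reasonable representations.

```python
from dataclasses import dataclass, field

@dataclass
class DirectedGraph:
    nodes: dict = field(default_factory=dict)  # node name -> attribute dict
    edges: dict = field(default_factory=dict)  # source -> {target: weight}

    def add_node(self, name, **attrs):
        """Create the node if needed and merge in any attributes."""
        self.nodes.setdefault(name, {}).update(attrs)
        self.edges.setdefault(name, {})

    def add_edge(self, source, target, weight=1.0):
        """Add a directed, weighted edge from source to target."""
        self.add_node(source)
        self.add_node(target)
        self.edges[source][target] = weight

    def successors(self, name):
        """Nodes reachable from `name` by following one outgoing edge."""
        return list(self.edges[name])

g = DirectedGraph()
g.add_node("A", kind="event")
g.add_edge("A", "B", weight=0.7)
g.add_edge("B", "C", weight=0.2)
print(g.successors("A"))  # ['B']
```

Because edges are stored per source node, directionality is explicit: "B" is a successor of "A", but "A" is not a successor of "B".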

Computational Complexity of Beryl Spaghetti Models

The computational cost of running beryl spaghetti models depends on the size and density of the graph and on the analysis being performed. For sparse graphs, where the number of edges grows roughly in proportion to n, the number of nodes, the algorithms typically run in polynomial time (e.g., O(n^2) or O(n^3)). Dense graphs can have up to n(n-1) directed edges, so the same algorithms do considerably more work, and analyses that enumerate combinations of nodes, such as all subsets, can become exponential (e.g., O(2^n)).
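One way to see how graph density affects the amount of work is to count the edges a traversal has to examine. The sketch below uses breadth-first search as a stand-in. That choice is an assumption, since the text does not name the algorithms involved, and the graph size of 200 nodes is arbitrary.

```python
from collections import deque

def bfs_edge_visits(adjacency, start):
    """Breadth-first search that returns how many edges were examined."""
    seen = {start}
    queue = deque([start])
    visits = 0
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            visits += 1
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return visits

n = 200
# Sparse example: a chain 0 -> 1 -> ... -> n-1 with n - 1 edges.
chain = {i: [i + 1] if i + 1 < n else [] for i in range(n)}
# Dense example: every node points to every other node, n * (n - 1) edges.
complete = {i: [j for j in range(n) if j != i] for i in range(n)}

print(bfs_edge_visits(chain, 0))     # 199
print(bfs_edge_visits(complete, 0))  # 39800
```

With the same number of nodes, the dense graph makes the traversal examine 200 times as many edges. The growth in this example is quadratic in n, not exponential.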

Resource Requirements

The resource requirements for running beryl spaghetti models vary depending on the size and complexity of the graph. Memory usage is primarily determined by the number of nodes and edges in the graph, while computational time is influenced by the complexity of the algorithms and the size of the input data.
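A rough way to check how memory use scales with nodes and edges is to measure it directly. The following Python sketch builds random adjacency lists of two sizes and reports the peak memory traced by the standard-library `tracemalloc` module. The function names, graph sizes, and seed are assumptions for illustration, and the byte counts will vary by Python version and platform.

```python
import random
import tracemalloc

def build_graph(n_nodes, n_edges, seed=0):
    """Random directed graph stored as adjacency lists (illustrative only)."""
    rng = random.Random(seed)
    adjacency = {i: [] for i in range(n_nodes)}
    for _ in range(n_edges):
        adjacency[rng.randrange(n_nodes)].append(rng.randrange(n_nodes))
    return adjacency

def peak_memory_bytes(n_nodes, n_edges):
    """Peak memory allocated by Python while building the graph."""
    tracemalloc.start()
    graph = build_graph(n_nodes, n_edges)  # kept alive until after measuring
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return peak

for n, m in [(1_000, 5_000), (10_000, 50_000)]:
    print(f"{n} nodes, {m} edges: {peak_memory_bytes(n, m)} bytes at peak")
```

Measuring this way gives a figure for one specific representation. A more compact structure, such as arrays of integer indices, would use less memory for the same graph.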